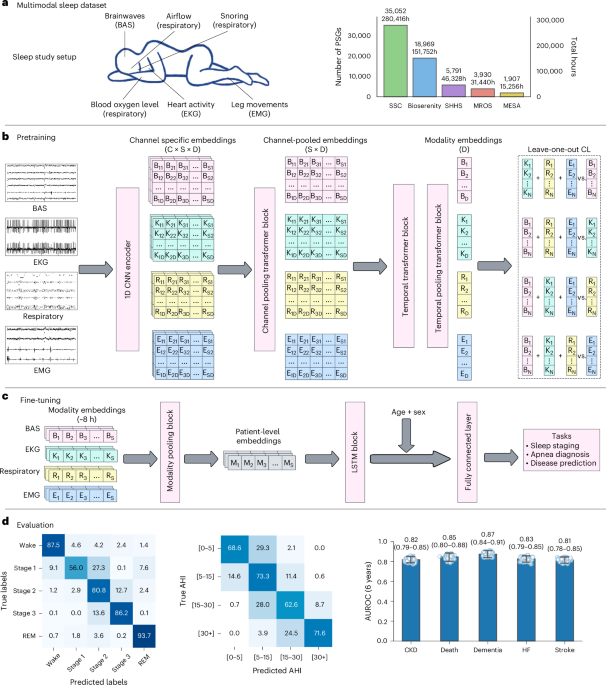

A multimodal sleep foundation model for disease prediction

Key Points:

- SleepFM, a large-scale foundation model pretrained on over 585,000 hours of polysomnography (PSG) data, consistently outperforms two supervised baselines (a demographics-based MLP and an end-to-end PSG model without pretraining) across 1,041 disease phenotypes, with AUROC improvements ranging from 5% to 17%.

- SleepFM variants using pretrained embeddings achieve robust gains in predicting neurological, circulatory, endocrine/metabolic, respiratory, and mental disorders. They perform especially well on conditions such as dementia, myoneural disorders, atherosclerosis, diabetes complications, and respiratory insufficiency, showing predictive value beyond what demographic features alone provide.

- The model’s self-supervised contrastive learning pretraining enables better generalization and stable performance even with
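The self-supervised contrastive pretraining mentioned above can be illustrated with a generic symmetric InfoNCE (CLIP-style) loss over paired embeddings. This is a minimal NumPy sketch under stated assumptions, not SleepFM's actual training objective: the function name `info_nce_loss`, the temperature of 0.1, and the idea of pairing embeddings from two modalities of the same recording window are all hypothetical choices made for illustration.

```python
import numpy as np


def info_nce_loss(z_a: np.ndarray, z_b: np.ndarray, temperature: float = 0.1) -> float:
    """Generic symmetric InfoNCE loss between two batches of paired embeddings.

    Hypothetical setup: row i of z_a and row i of z_b come from the same
    recording window (e.g. two PSG modalities); every other row in the
    batch serves as a negative example.
    """
    # L2-normalise rows so the dot product equals cosine similarity
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)
    logits = z_a @ z_b.T / temperature  # (batch, batch) similarity matrix

    def cross_entropy_diag(l: np.ndarray) -> float:
        # Numerically stable row-wise log-softmax; the correct "class" is the diagonal
        l = l - l.max(axis=1, keepdims=True)
        log_probs = l - np.log(np.exp(l).sum(axis=1, keepdims=True))
        return float(-np.mean(np.diag(log_probs)))

    # Average the a->b and b->a directions so the loss is symmetric
    return 0.5 * (cross_entropy_diag(logits) + cross_entropy_diag(logits.T))


# Usage: matched pairs should yield a lower loss than unrelated pairs
rng = np.random.default_rng(0)
view_a = rng.normal(size=(8, 16))
view_b = view_a + 0.01 * rng.normal(size=(8, 16))  # nearly identical pairs
unrelated = rng.normal(size=(8, 16))                 # no pairing signal
print(info_nce_loss(view_a, view_b), info_nce_loss(view_a, unrelated))
```

Matched pairs push the diagonal of the similarity matrix above the off-diagonal negatives, so the first printed loss should be far smaller than the second, which stays near log(batch size) for unrelated embeddings.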
